Smart eyewear will revolutionize the way we view our world. But how can virtual information be projected directly in front of our eyes or the retina itself be used as a screen?

Enriching the real world with virtual information began with gaming applications and then spread to factories. Now it is about to become part of our everyday lives. Thanks to augmented reality (AR), factory workers, for example, can already have step-by-step assembly instructions projected directly in front of their eyes, which leaves both hands free for their work.

The principle of AR could also simplify many things in our day-to-day lives. On a foreign holiday we could have translations of signs or menus projected in front of our eyes in real time, or route maps if we were on a cycling tour. “But the headsets currently available are not suitable for everyday wear. What we need are smart glasses that are small, stylish and affordable,” says Stefan Morgott, Application Engineer at OSRAM Opto Semiconductors. And he goes on to explain why such glasses do not yet exist: “For this to happen, the technology has to be made considerably smaller. And its efficiency has to be much greater.” This is not an easy task because the virtual display of information is based on a highly complex system of sensors, optics and projection.

The information must be adapted to the wearer's movements so that its perspective stays correct, and it must be overlaid on what the person actually sees in the real world. A computer detects the environment using cameras, GPS or other sensor data and selects the information to be displayed.
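As a rough illustration of what adapting the information "in terms of perspective" involves, the sketch below reprojects a fixed real-world point into display coordinates as the wearer turns their head. It is a minimal pinhole-camera model and not a description of any actual product; the function name, the focal length in pixels and the display size are assumptions chosen for illustration.

```python
import math

def project_to_display(point, head_yaw_deg, focal_px=500.0, width=640, height=480):
    """Project a world point into display pixel coordinates.

    point: (x, y, z) in meters, with x to the right, y up and z forward.
    head_yaw_deg: head rotation about the vertical axis (positive = turning right).
    focal_px, width, height: hypothetical display parameters.
    Returns (u, v) in pixels, or None if the point is behind the wearer.
    """
    x, y, z = point
    yaw = math.radians(head_yaw_deg)
    # Express the point in the head's coordinate frame (inverse head rotation).
    xh = x * math.cos(yaw) - z * math.sin(yaw)
    zh = x * math.sin(yaw) + z * math.cos(yaw)
    if zh <= 0:
        return None
    # Pinhole projection; v grows downward, as in most image coordinate systems.
    u = width / 2 + focal_px * xh / zh
    v = height / 2 - focal_px * y / zh
    return (u, v)
```

With these assumed values, a label anchored two meters straight ahead sits at the center of the display, and it shifts to the left of the display when the head turns to the right, so that it appears to stay fixed in the real world.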

Doubly virtual

There are various ways in which virtual images can be generated in front of the eyes. Stefan Morgott and his team are working to find the smallest and most efficient solution. “It’s neither a monitor nor projection in the usual sense. It is doubly virtual – a virtual image suspended in space,” explains Morgott. Our eyes can only focus on objects beyond a minimum distance, which depends on our age and is at least ten centimeters. A display sitting directly in front of the eye would therefore be too close to be seen sharply. For the virtual image to appear in the correct size and in the correct focal plane in the real world, it has to be projected.

“There are very different concepts for achieving this, namely passive or active light-emitting microdisplays or a scanning mirror. The solutions currently available are either too large, have too low a resolution or luminosity, are not efficient enough or are simply not ready for the market. That is why we are currently relying on laser beam scanning,” says Morgott, by way of explaining the choice of visualization technology. With lasers, the projector attached to the eyewear frame can be made super small, lightweight, bright and energy-efficient. This makes it very attractive for the consumer market.

The retina as a screen

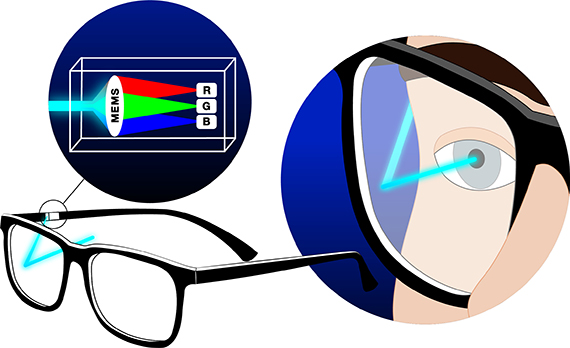

But special solutions require special light sources. The idea was to combine the three colors of red, green and blue in one laser package to make the display system as small as possible. Morgott and his team have now made the crucial breakthrough – combining three discrete R/G/B lasers in an RGB laser package.

Guided by a MEMS microscanner, which is attached to the eyewear frame, the laser beam passes through the pupil and hits the lens at different angles. The lens focuses the beam on the retina, where the laser builds up a virtual image pixel by pixel. Since only one laser beam is involved, the display system is very small. And the output of the laser is so low that there is no risk to the eye.
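To make "pixel by pixel" concrete, the following sketch maps each pixel of a small image to a pair of beam deflection angles and a laser intensity, in the order a simple raster scan would visit them. It is a simplified model under stated assumptions: the field-of-view values, the linear angle mapping and the function name are hypothetical, and real scanners often follow resonant, non-linear trajectories instead.

```python
def raster_scan(image, fov_h_deg=30.0, fov_v_deg=20.0):
    """Yield (h_angle_deg, v_angle_deg, intensity) for each pixel, row by row.

    image: list of rows, each a list of intensities between 0 and 1.
    fov_h_deg, fov_v_deg: assumed horizontal and vertical field of view.
    Angles are measured from the optical axis; each pixel is addressed
    at its center.
    """
    rows = len(image)
    cols = len(image[0])
    for r, row in enumerate(image):
        # The top row gets the largest positive vertical angle.
        v_angle = fov_v_deg * (0.5 - (r + 0.5) / rows)
        for c, intensity in enumerate(row):
            # The left column gets the most negative horizontal angle.
            h_angle = fov_h_deg * ((c + 0.5) / cols - 0.5)
            yield (h_angle, v_angle, intensity)


# A 2x2 test frame: the laser is driven only where the intensity is above zero.
frame = [[0, 1],
         [1, 0]]
lit_steps = [step for step in raster_scan(frame) if step[2] > 0]
```

Because the laser is switched on only for pixels that carry content, dark regions of the image cost no light at all in this model, which fits the article's point that very little light is needed.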

Only a laser is able to “paint” directly on the retina, and very little light is needed since the beam is targeted directly at the eye. All other display systems emit light in all directions – and a proportion of that light is unused.

The person sees the image, such as an arrow, a symbol or text, as if it were right in front of them. Large areas within the field of vision must remain clear. A benefit of laser technology is that the beam allows a sufficiently clear view of the real world.

A promising combination

OSRAM has almost ten years of experience in a wide range of optical semiconductor technologies for augmented reality. Collaboration between lighting experts and sensor specialists now enables even more of the building blocks of AR systems to be covered. “AR glasses will be the next generation of hardware in consumer electronics,” says Morgott with conviction. “By combining forces with sensor specialist ams, we can offer such things as 3D sensing for smart glasses in addition to lighting and display technologies.”

Eyewear equipped with a special 3D sensor can present the real world as a three-dimensional model and place virtual information in it – resulting in a completely new viewing experience.

Light sources from OSRAM and sensors from ams are already being used in eye-tracking applications in which a laser beam is guided by the movement of the eyes. This technology can be used for human-computer interaction: moving a cursor by moving your eyes and then blinking to click. “Keeping an eye on the future” therefore has a dual meaning for our new combined company.
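The interaction described above, moving a cursor with the eyes and blinking to click, can be sketched in a few lines. This is only an illustrative model: the normalized gaze coordinates, the screen size, the blink-length thresholds and both function names are assumptions, not part of any OSRAM or ams interface.

```python
def gaze_to_cursor(gaze_x, gaze_y, screen_w=1280, screen_h=720):
    """Map a normalized gaze position (0..1 on each axis) to a pixel position.

    Values outside 0..1 are clamped to the screen edge.
    """
    gx = min(max(gaze_x, 0.0), 1.0)
    gy = min(max(gaze_y, 0.0), 1.0)
    return (round(gx * (screen_w - 1)), round(gy * (screen_h - 1)))


def detect_clicks(eye_open_samples, min_closed=2, max_closed=5):
    """Return the sample indices at which a deliberate blink ends.

    eye_open_samples: sequence of booleans, True while the eye is open.
    A blink counts as a click only if the eye stayed closed for between
    min_closed and max_closed consecutive samples, which filters out
    both very short flickers and long eye closures.
    """
    clicks = []
    closed = 0
    for i, is_open in enumerate(eye_open_samples):
        if not is_open:
            closed += 1
        else:
            if min_closed <= closed <= max_closed:
                clicks.append(i)
            closed = 0
    return clicks
```

Requiring a minimum and a maximum closure length is one simple way to separate an intentional blink from sensor noise or from the wearer simply closing their eyes; a real system would likely need a more robust criterion.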