The gap between man and machine is dwindling. The physical and digital worlds are merging. How will we interact with increasingly intelligent machines in the future? Will there even be a perceptible human-machine interface?

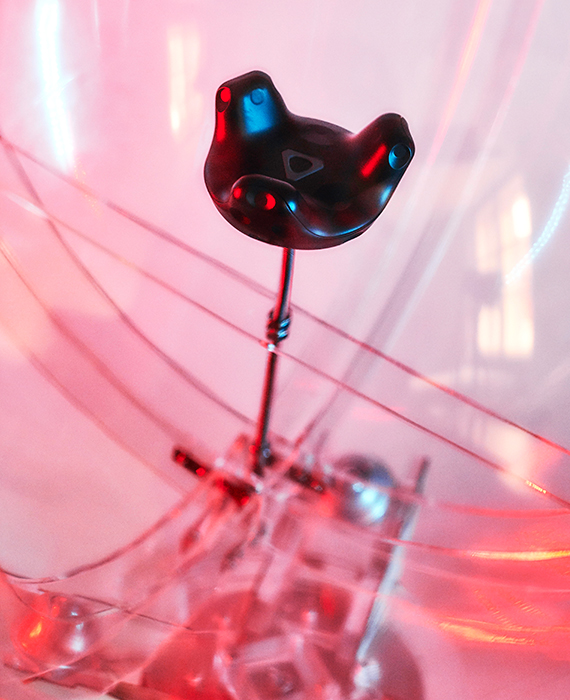

Andreas Butz rotates a transparent plexiglass orb the size of a beach ball. A sensor at the center of the orb tracks the movement using laser beams. The movement is transmitted one-to-one into the virtual reality displayed in his VR glasses, where the plexiglass orb appears as a globe. What might sound like something out of a 1970s science fiction film is, in fact, highly topical: The Institute of Informatics in Munich is doing nothing less than exploring the future of human-machine interaction.

Butz takes the VR glasses off and leans back in his chair. “The technology has to fade into the background and leave space for the application. The goal is to reduce the points of friction between man and machine – like in this test setup. The lightning pace at which computing power and artificial intelligence (AI) are growing opens up many new opportunities for that – but we lack an overarching operating concept.”

The technology must become invisible

Professor Andreas Butz is head of the Chair for Human-Computer Interaction at the Department for Informatics at Ludwig Maximilian University (LMU) in Munich. He is also the spokesman for the Scientific Advisory Board of the German Research Center for Artificial Intelligence.

His long-held conviction is that computer technology has to take a back seat to a far greater extent. Future interfaces should meet people where they are, in a far more natural way: in our everyday world, with all our senses. That’s why, in the current test setup, the connection to the digital universe is a very real one that you can touch and feel.

Approximating natural forms of communication

Butz is certain: Future concepts will combine gesture and voice control with other forms of interaction, especially haptics, i.e., direct touch. “The sense of touch is still underrated when it comes to human-machine interaction. At the interface, it currently appears only in the form of vibration. But haptics is a key part of our physical world. Reducing everything to our sense of vision is an unnecessary restriction.”

The LMU’s scientists are therefore grappling with how to integrate haptics into virtual reality. For example, they link a real and a virtual globe by means of a multi-touch surface scanned by a laser. Users control the virtual reality with the real, physical globe, touching it as they do so, and can thus interact more efficiently. “It’s an approximation of our natural form of interaction. When you hold a new object in your hands, you grasp it faster in the truest sense of the word,” is how Butz’s colleague David Englmeier describes the test setup. “In future, any desired abstract objects can be simulated electromagnetically or by means of ultrasound – they’re not real like this globe, but you can still feel them, and they can complement gesture control, for example.”

According to Butz, however, pure gesture control using light sensors works well for sporadic interactions within a defined space, such as a car. But, Butz notes, gesture control has one big limitation: feedback. “We need signals for ‘I’m listening to you’, ‘I’ve understood you’, or ‘I’ve recognized you’. In our world of objects, we receive that feedback everywhere, but the issue is still unresolved when it comes to gesture control.” Haptic control solves this through active feedback such as vibration, touch, or pressure.

But will we even need a “sensual” interface in the future? Couldn’t we control machines directly with our thoughts? That’s a long way off, according to Butz: “Research related to recording brain activity is fascinating, but not suitable for everyday application as things currently stand. At present, it’s not good for much more than steering things roughly to the right or left or selecting initial letters.”

Redefinition of the human-machine relationship

Yet artificial systems are becoming more and more intelligent and are increasingly equipped with sensors. “For the first time, the computing power and the technological prerequisites exist for artificial intelligence to be used in everyday life,” says Butz.

He adds that AI will have the greatest impact on our living environment in the future. Applications based on neural networks are already suitable for everyday use, whether in search algorithms, autonomous driving, or image recognition. “AI tries to replicate neural networks and is even better than humans in some areas. Think of image recognition. Its accuracy surpasses that of humans in some cases,” explains Butz. And the notion that machines merely respond is a thing of the past. Butz is certain that interaction will move in the direction of partnership: “Robots equipped with artificial intelligence, as the next stage in the human-machine interface, will no longer be mere menial assistants in the future. They will learn how to perform skilled activities.”

Equals?

Teamwork with robots raises the question of trust and of who will be the boss in the future. “Artificial intelligence mustn’t be a black box. Calibrated, not blind, trust is needed. As is an awareness that a robot is far from being intelligent just because it does or says a few smart things,” states Butz. The professor admits that he initially underestimated the importance of emotional intelligence in robotics. “I used to think that was impossible. But ever since I’ve been working closely with psychologists, I understand that artificial intelligence does not have to understand the world right down to the very last detail. For many application areas, it’s enough to simulate a certain depth. As a result, robotics can be used in many areas where superficial empathy is sufficient.”

Information that takes on a shape

So will we humans one day interact with machines almost as naturally and matter-of-factly as we do with other people? “Yes,” says Butz. “And that will be all the more natural the more they engage with us in the real world, with all our senses and social habits.” So not a reduced string of zeros and ones, but information that takes on a shape – the “human” machine.